Project Overview

Dataset Analysis

The bird species dataset contains diverse images across 25 different bird species with the following characteristics:

- Total images: 1,352

- Training images: 1,227

- Testing images: 125

Each image was resized to 224×224 pixels with 3 color channels, converted to a tensor, and normalized per channel with the ImageNet statistics so that the inputs match what the pre-trained models expect.

    from torchvision import transforms

    # Data transformation pipeline
    transform = transforms.Compose([
        transforms.Resize((224, 224)),
        transforms.ToTensor(),
        # ImageNet normalization: per-channel mean first, then standard deviation
        transforms.Normalize((0.485, 0.456, 0.406), (0.229, 0.224, 0.225)),
    ])
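As a rough illustration of what the normalization step does, the sketch below applies the per-channel formula (x - mean) / std to a single RGB pixel in plain Python. The function name `normalize_pixel` is hypothetical, the statistics are the commonly published ImageNet values, and the pixel is assumed to be already scaled to [0, 1], as `ToTensor()` does.

```python
# Commonly published ImageNet per-channel statistics (RGB order).
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)


def normalize_pixel(rgb, mean=IMAGENET_MEAN, std=IMAGENET_STD):
    """Apply (x - mean) / std to each channel of one RGB pixel in [0, 1]."""
    return tuple((x - m) / s for x, m, s in zip(rgb, mean, std))


# A pixel equal to the channel means maps to zero in every channel,
# and a pure white pixel maps to a positive value in every channel.
mean_pixel = normalize_pixel(IMAGENET_MEAN)
white_pixel = normalize_pixel((1.0, 1.0, 1.0))
```

Passing the mean where the standard deviation belongs (or vice versa) still runs without error but shifts and scales every channel incorrectly, so the argument order is worth double-checking.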

Model Architecture Comparison

I implemented and compared three popular CNN architectures, each with different depths and architectural characteristics:

AlexNet

Winner of the 2012 ImageNet challenge, used here as the baseline model for comparison.

- 8 layers total (5 convolutional, 3 fully connected)

- Total training time: 437.42 seconds

- Best optimizer: RMSprop

- Best epoch: 14

- Best performance (loss): 0.000859

VGGNet

Runner-up in the 2014 ImageNet classification challenge (and winner of its localization task), with 16 layers and small 3×3 filters throughout.

- 16 layers (13 convolutional, 3 fully connected)

- Total training time: 379.21 seconds

- Best optimizer: Adam

- Best epoch: 13

- Best performance (loss): 0.000247

ResNet

The 2015 ImageNet challenge winner with 152 layers and special skip connections.

- 152 layers with residual connections

- Total training time: 502.46 seconds

- Best optimizer: RMSprop

- Best epoch: 11

- Best performance (loss): 0.001461

Optimization Methods

A key aspect of this project was evaluating different optimization algorithms to find the most effective approach for each model. I tested three optimizers:

Adam

Adaptive Moment Estimation combines benefits of AdaGrad and RMSProp.

- Best for: VGGNet

- Achieved lowest overall loss (0.000247)

- Quick convergence in early epochs

- Consistent performance after initial convergence

SGD

Stochastic Gradient Descent with momentum.

- Most stable training curves

- Slower convergence than other optimizers

- ResNet with SGD showed minimal standard deviation

- Steady improvement throughout all epochs

RMSprop

Root Mean Square Propagation adapts learning rates based on recent gradients.

- Best for: AlexNet and ResNet

- Fastest convergence for ResNet

- ResNet reached 100% test accuracy on certain runs

- Some fluctuations in later epochs

    # Optimizer configuration example for ResNet with RMSprop
    learning_rate = 1e-4
    optimizer = torch.optim.RMSprop(cnn_model.parameters(), lr=learning_rate)
    loss_fn = nn.CrossEntropyLoss()
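To make the difference between the three optimizers more concrete, here is a minimal pure-Python sketch of their update rules applied to a toy one-dimensional objective, f(w) = w². The function names, the toy objective, and the hyperparameter values are assumptions chosen for illustration; they are not the settings used in this project, and the formulas follow the commonly documented forms of each rule.

```python
import math


def sgd_momentum_step(w, grad, state, lr=0.01, momentum=0.9):
    """SGD with momentum: accumulate a velocity, then step along it."""
    v = momentum * state.get("v", 0.0) + grad
    state["v"] = v
    return w - lr * v


def rmsprop_step(w, grad, state, lr=0.01, alpha=0.99, eps=1e-8):
    """RMSprop: scale the step by a running average of squared gradients."""
    sq = alpha * state.get("sq", 0.0) + (1 - alpha) * grad * grad
    state["sq"] = sq
    return w - lr * grad / (math.sqrt(sq) + eps)


def adam_step(w, grad, state, lr=0.01, beta1=0.9, beta2=0.999, eps=1e-8):
    """Adam: momentum-style first moment plus RMSprop-style second moment,
    both bias-corrected for their zero initialization."""
    t = state.get("t", 0) + 1
    m = beta1 * state.get("m", 0.0) + (1 - beta1) * grad
    v = beta2 * state.get("v", 0.0) + (1 - beta2) * grad * grad
    state.update(t=t, m=m, v=v)
    m_hat = m / (1 - beta1 ** t)
    v_hat = v / (1 - beta2 ** t)
    return w - lr * m_hat / (math.sqrt(v_hat) + eps)


def minimize(step_fn, w0=5.0, steps=200):
    """Run a fixed number of updates on f(w) = w^2 and return the final w."""
    w, state = w0, {}
    for _ in range(steps):
        grad = 2.0 * w  # derivative of w^2
        w = step_fn(w, grad, state)
    return w


final_weights = {
    "sgd": minimize(sgd_momentum_step),
    "rmsprop": minimize(rmsprop_step),
    "adam": minimize(adam_step),
}
```

On a toy problem like this the relative speeds say little about real networks; the point is only that RMSprop and Adam rescale each step by gradient magnitude, while SGD with momentum does not.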

Performance Visualizations

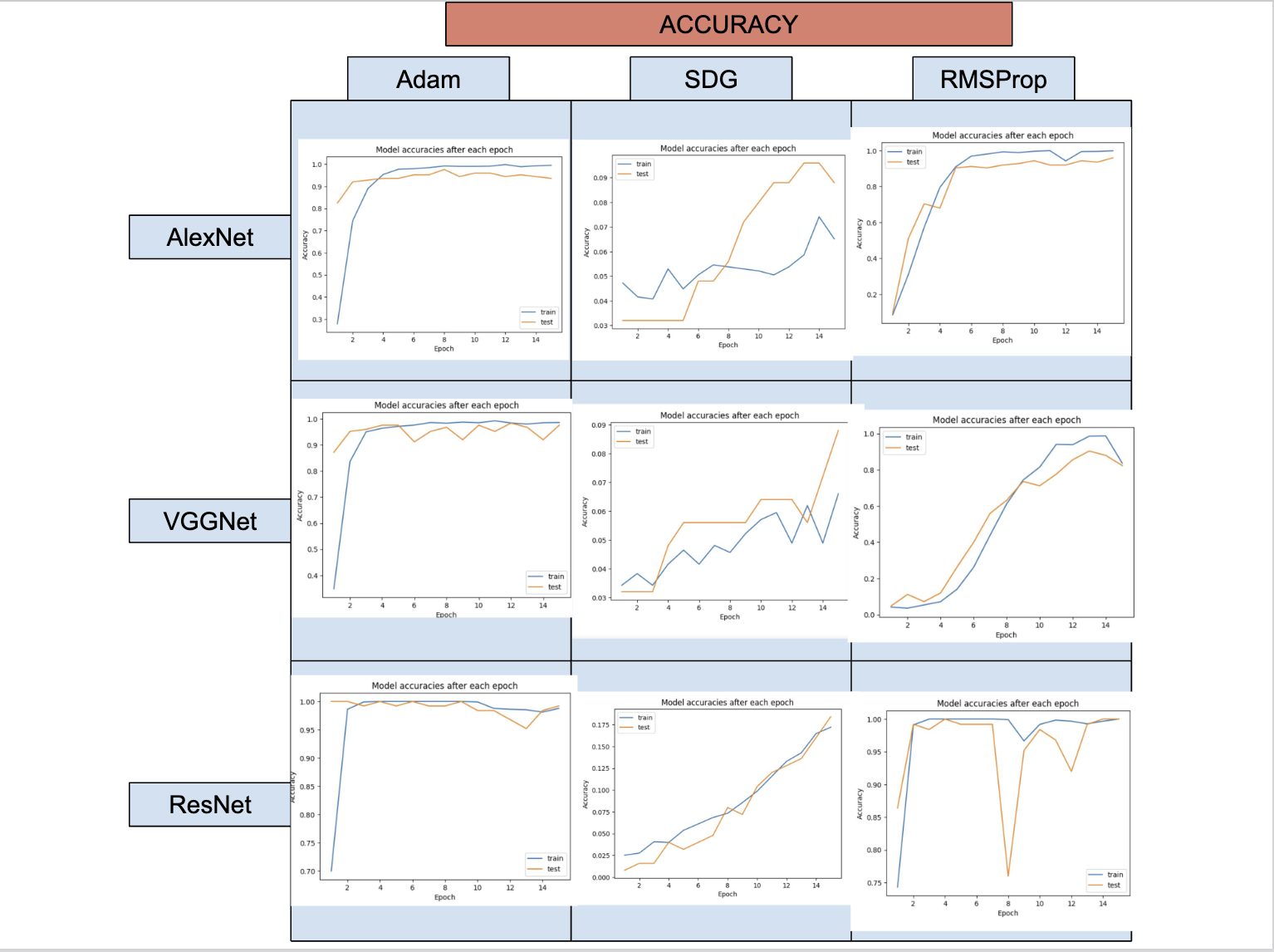

Accuracy Comparison

Training and test accuracy per epoch for AlexNet, VGGNet, and ResNet under each optimizer.

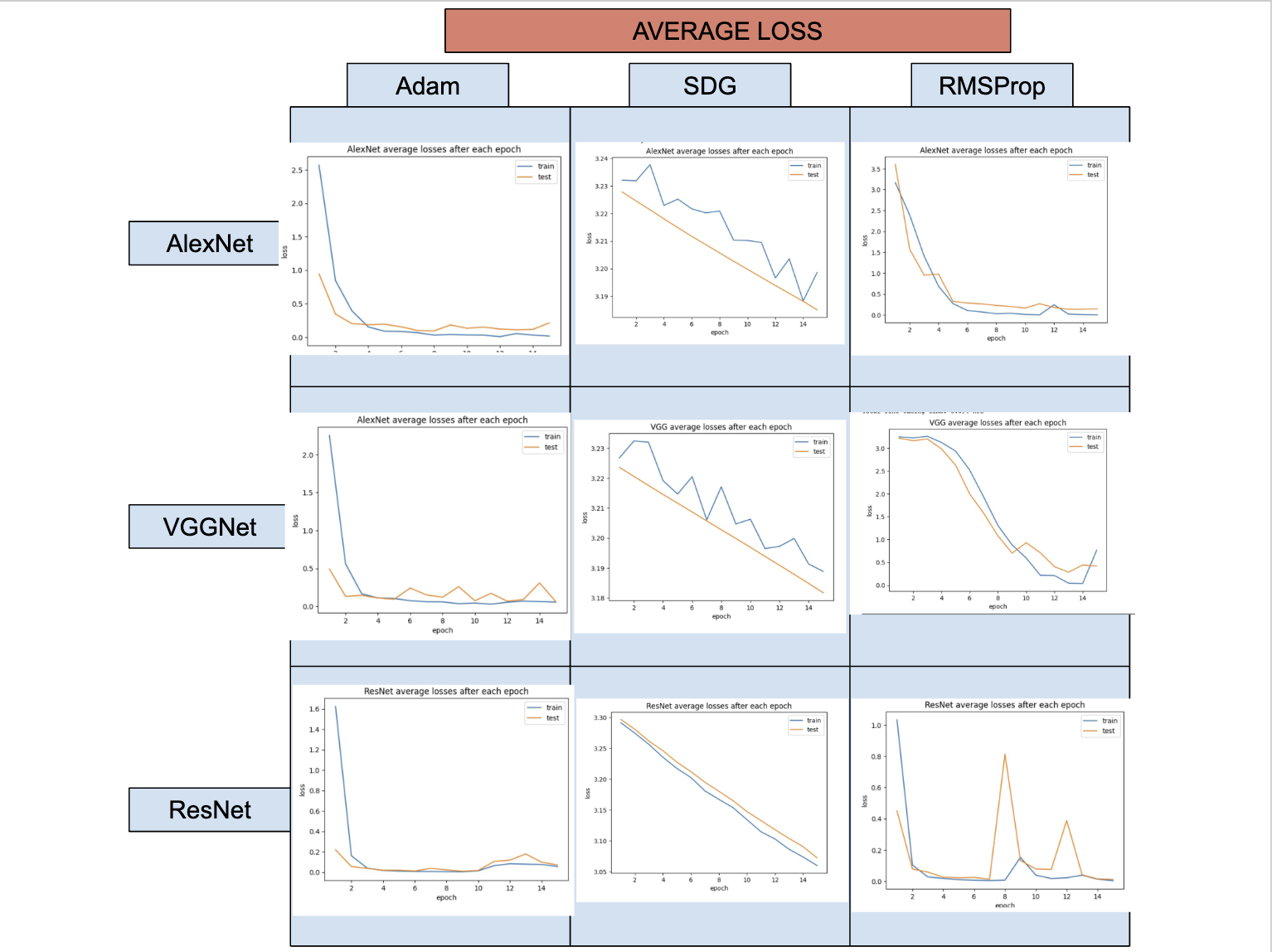

Loss Curves

Training and testing loss curves showing convergence patterns for each model-optimizer combination.

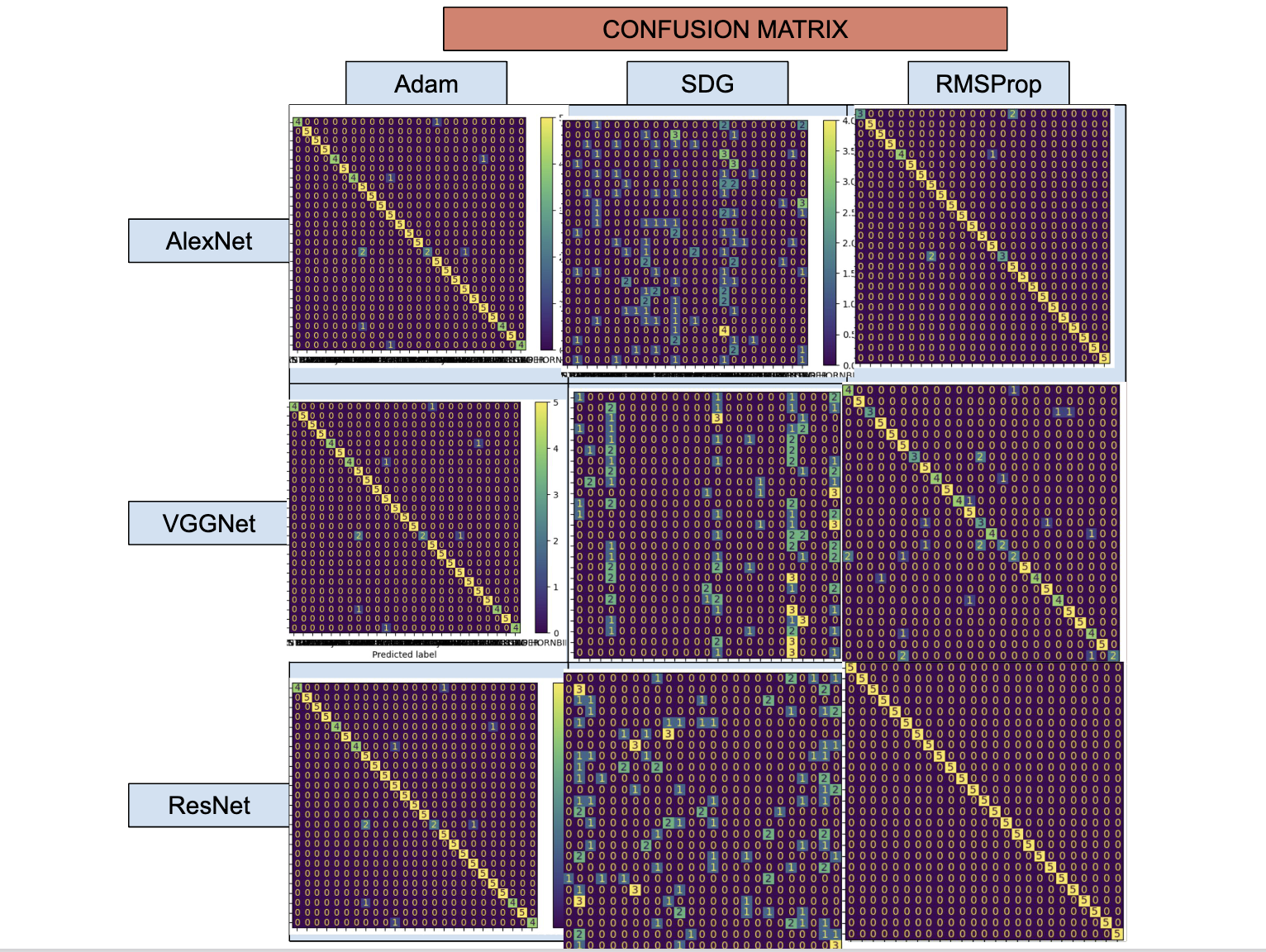

Confusion Matrices

Confusion matrices visualizing classification performance across all 25 bird species.
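For readers who want to turn a confusion matrix into numbers, the sketch below computes overall accuracy and per-class recall in plain Python. The helper names and the 3-class toy matrix are made up for illustration, and the code assumes rows hold true labels and columns hold predictions.

```python
def accuracy(confusion):
    """Overall accuracy: the diagonal (correct predictions) over all predictions."""
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    return correct / total


def per_class_recall(confusion):
    """Recall per class: diagonal entry over its row sum (rows = true labels)."""
    return [row[i] / sum(row) if sum(row) else 0.0
            for i, row in enumerate(confusion)]


# Toy 3-class matrix with made-up counts (rows = true class, columns = predicted).
toy = [
    [5, 0, 0],
    [0, 4, 1],
    [0, 0, 5],
]
toy_accuracy = accuracy(toy)        # 14 correct out of 15
toy_recall = per_class_recall(toy)  # one class-1 image was predicted as class 2
```

The same arithmetic applies to the full 25-class matrices: on a 125-image test set, 123 correct predictions give 123 / 125 = 98.40% accuracy.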

Implementation Details

All models were implemented in PyTorch and fine-tuned from weights pre-trained on ImageNet. The final layer of each model was replaced so that it outputs scores for our 25 bird species:

ResNet Implementation Example

    import torch
    import torch.nn as nn
    from torchvision import models


    class ResNet152(nn.Module):
        def __init__(self, num_classes, pretrained=True):
            super(ResNet152, self).__init__()
            # Load the torchvision backbone, with ImageNet weights when pretrained=True
            net = models.resnet152(pretrained=pretrained)

            # Modify final layer for our classification task
            num_features = net.fc.in_features
            net.fc = nn.Linear(num_features, num_classes)

            # Transfer components from pre-trained model
            self.conv1 = net.conv1
            self.bn1 = net.bn1
            self.relu = net.relu
            self.maxpool = net.maxpool
            self.layer1 = net.layer1
            self.layer2 = net.layer2
            self.layer3 = net.layer3
            self.layer4 = net.layer4
            self.avgpool = net.avgpool
            self.fc = net.fc

        def forward(self, x):
            # Stem: initial convolution, batch norm, activation, and pooling
            x = self.conv1(x)
            x = self.bn1(x)
            x = self.relu(x)
            x = self.maxpool(x)

            # Four stages of residual blocks
            x = self.layer1(x)
            x = self.layer2(x)
            x = self.layer3(x)
            x = self.layer4(x)

            # Global pooling, flatten to (batch, features), then classify
            x = self.avgpool(x)
            x = torch.flatten(x, 1)
            x = self.fc(x)
            return x

Challenges and Solutions

Implementation Challenges

- Test Loop Evaluation: Fixed the test_loop() function to correctly track predictions and calculate confusion matrices.

- Optimization Testing: Created separate notebooks for each optimizer type to isolate and compare performance.

- Memory Management: Adjusted batch sizes and implemented efficient data loading to handle high-resolution images.

- Hyperparameter Tuning: Systematic testing to find optimal learning rates for each model-optimizer combination.

Learning Process

Throughout this project, I gained valuable insights into:

- Transfer learning techniques for computer vision tasks

- Implementing and fine-tuning state-of-the-art CNN architectures

- Impact of different optimizers on model convergence and performance

- Effective evaluation metrics for multi-class image classification

- Managing computational resources for deep learning projects

Key Findings and Conclusions

Performance Summary

After comprehensive testing across all model-optimizer combinations, these were the key findings:

- VGGNet with Adam achieved the lowest overall loss (0.000247)

- ResNet with RMSprop achieved 100% test accuracy on certain runs

- All models showed rapid improvement in the first 3-4 epochs

- VGGNet was the fastest to train (379.21s)

- ResNet took the longest to train (502.46s) but reached 98.40% test accuracy

- Minimal misclassifications across models, with particularly strong performance from ResNet

Final Verdict

In my experiments, ResNet with RMSprop was the most effective combination for bird species classification, achieving:

- Training Accuracy: 99.10%

- Test Accuracy: 98.40% (reaching 100% in optimization testing)

- Excellent generalization to new examples

- Strong performance across all 25 bird species

ResNet's architecture, despite being the most complex, proved most effective at distinguishing subtle differences between similar bird species.