Internship Overview

Projects

3 Projects Over 12 Weeks

18031-1 Documentation

A testing guide to help lab staff and new members who are unfamiliar with the process understand how to test to 18031-1 (Internet-Connected Radio Equipment).

18031-2 Documentation

A testing guide to help lab staff and new members who are unfamiliar with the process understand how to test to 18031-2 (Personal Data Processing Equipment).

Local LLM Trial Run

Created a fine-tuned LLM at a simplified scale to trial run the concept of a local LLM for lab usage as an assistant to aid with documentation and testing procedures.

EU Radio Equipment Directive (RED) Overview

About 18031 / EU RED

18031 (EN 18031) is the series of harmonised standards that supports the cybersecurity requirements of the EU Radio Equipment Directive (RED). All companies that sell applicable radio equipment in the EU must bring each product into conformity with these RED requirements by August 1, 2025, or risk losing the ability to sell that product. The standard is broken down into three parts:

18031-1

Internet-Connected Radio Equipment

Applies to: All radio equipment capable of Internet communication, whether directly or via another interconnected device.

18031-2

Personal Data Processing Equipment

Applies to: Devices that process personal data, e.g. internet-connected devices, childcare equipment, wireless toys and wearable devices.

18031-3

Financial Transaction Equipment

Applies to: Equipment that processes payments, virtual currencies, or other financial transactions. (18031-3 was not part of my three projects.)

Product Example

Throughout this document, an example product is used as a demo item to test against the 18031-1 documentation.

The example product is an IoT home device hub that users connect to over the local network. The device assets are:

| Asset Type | Asset Description | Possible Access | Public Access | Justification for Public Access | Environmental Restrictions |
|---|---|---|---|---|---|
| Security Asset | Admin passwords | Read, Write | No | N/A | Restricted admin interface |
| Security Asset | Cryptographic keys & certificates | Read, Write | No | N/A | Secure storage only |
| Security Asset | Firmware update files | Read, Execute | No | N/A | Secure update server |
| Network Asset | Wi-Fi configuration | Read, Write | No | N/A | Secured VLAN |
| Network Asset | VPN settings | Read, Write | No | N/A | Dedicated management VLAN |
| Network Asset | Remote management interfaces (SSH) | Read, Write, Execute | No | N/A | Admin-only access |
| Network Asset | Bluetooth communication | Read, Write | Possibly Yes | For pairing or data exchange | Pairing codes, encryption, whitelisting |
| Network Asset | Webserver interface (UI) | Read, Write, Execute | Possibly Yes | For user access to UI | TLS encryption, authentication |
| Admin Function | User role management | Read, Write | No | N/A | Admin dashboard only |
| Admin Function | System logs and audit trails | Read | No | N/A | Secure storage |
| Admin Function | Device provisioning | Execute | No | N/A | Factory or secure onboarding |
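Keeping the asset table as structured data lets a tester check it mechanically. The Python sketch below assumes one simple rule of thumb: any asset marked as publicly accessible must have both a justification and an environmental restriction. The `Asset` class, its field names and that rule are hypothetical illustrations, not wording taken from 18031-1.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    """One row of the asset table (field names are hypothetical)."""
    asset_type: str
    description: str
    possible_access: set[str]
    public_access: str   # "No", "Yes" or "Possibly Yes", as in the table
    justification: str   # "N/A" when there is no public access
    restrictions: str


def flag_unjustified_public_assets(assets: list[Asset]) -> list[str]:
    """Return descriptions of public assets lacking a justification or restriction."""
    flagged = []
    for asset in assets:
        is_public = asset.public_access != "No"
        missing_reason = asset.justification.strip() in ("", "N/A")
        missing_restriction = asset.restrictions.strip() in ("", "N/A")
        if is_public and (missing_reason or missing_restriction):
            flagged.append(asset.description)
    return flagged


inventory = [
    Asset("Security Asset", "Admin passwords", {"Read", "Write"}, "No", "N/A",
          "Restricted admin interface"),
    Asset("Network Asset", "Bluetooth communication", {"Read", "Write"}, "Possibly Yes",
          "For pairing or data exchange", "Pairing codes, encryption, whitelisting"),
    Asset("Network Asset", "Webserver interface (UI)", {"Read", "Write", "Execute"},
          "Possibly Yes", "For user access to UI", "TLS encryption, authentication"),
]

print(flag_unjustified_public_assets(inventory))  # -> []
```

For the example hub, every "Possibly Yes" row already carries a justification and a restriction, so nothing is flagged; a new row such as an undocumented debug port marked "Yes" with "N/A" would be reported.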

Project 1: 18031-1 Documentation

18031-1 Requirements

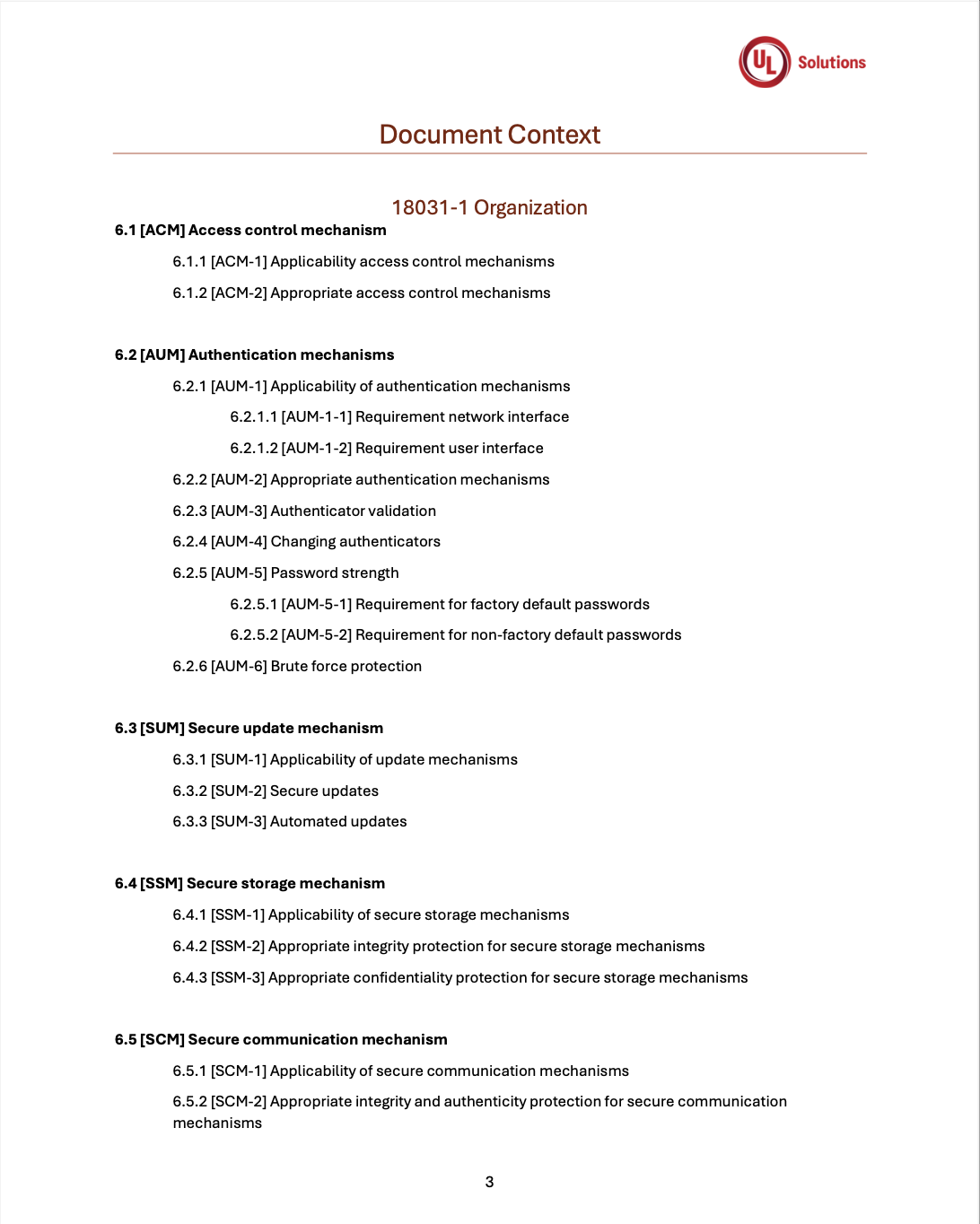

18031-1 is broken up into core requirements, each with sub-requirements.

Almost all requirements require physical testing rather than a documentation check.

- 6.1 [ACM] Access control mechanism

- 6.2 [AUM] Authentication mechanisms

- 6.3 [SUM] Secure update mechanism

- 6.4 [SSM] Secure storage mechanism

- 6.5 [SCM] Secure communication mechanism

- 6.6 [RLM] Resilience mechanism

- 6.7 [NMM] Network monitoring mechanism

- 6.8 [TCM] Traffic control mechanism

- 6.9 [CCK] Confidential cryptographic keys

- 6.10 [GEC] General equipment capabilities

- 6.11 [CRY] Cryptography

18031-1 Organization

The documentation follows a structured approach to guide lab technicians through compliance testing:

- Document Context: Overview of the 18031-1 standard structure

- Requirement Hierarchy: Core requirements broken down into testable sub-requirements

- Testing Procedures: Step-by-step guides for each requirement

- Asset Categorization: Security assets, network assets, and admin functions

- Compliance Verification: Methods to confirm requirement satisfaction

Each requirement includes detailed sub-requirements with specific testing criteria and expected outcomes.

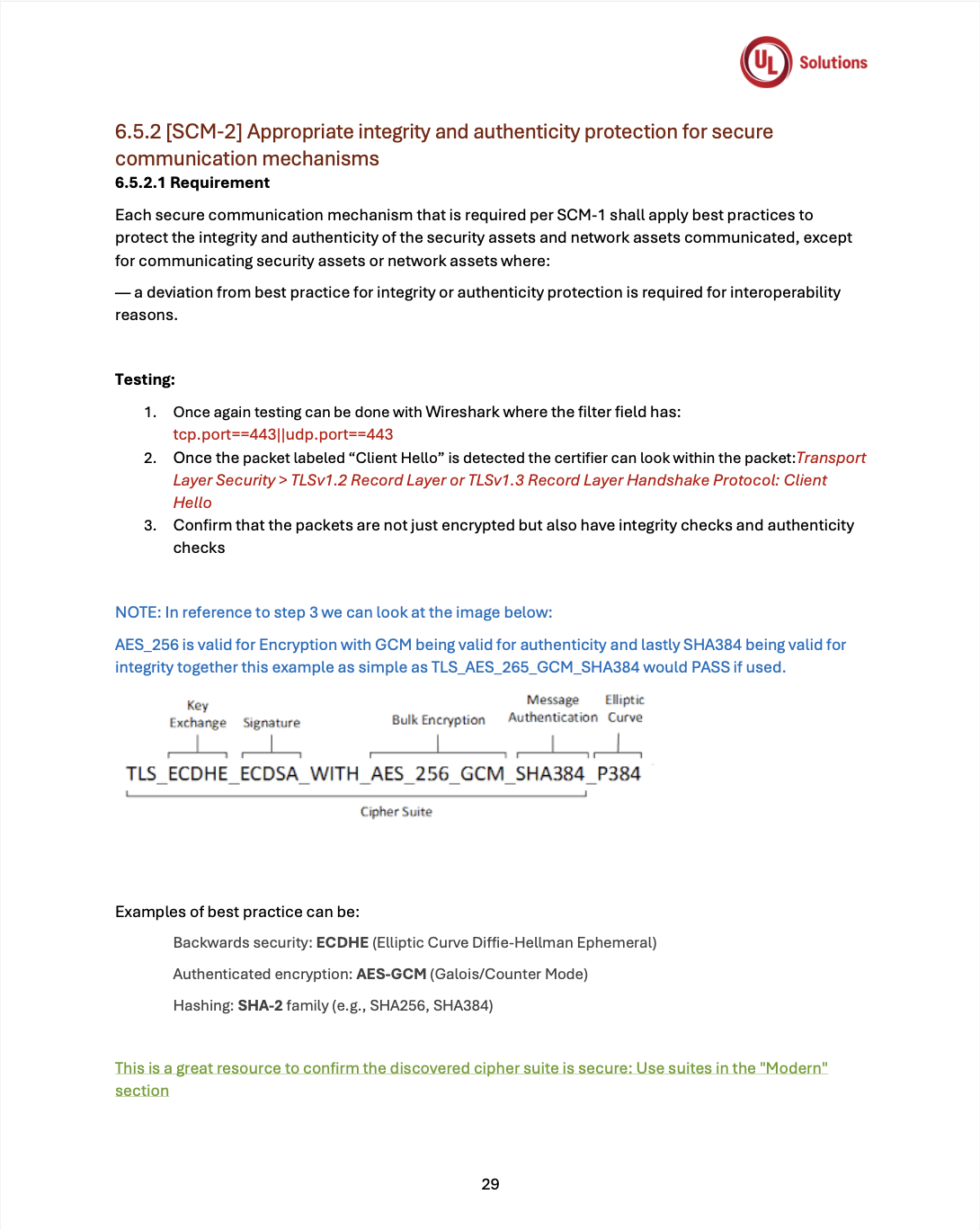

Example Requirement Breakdown

Requirement

6.5.2 [SCM-2] Secure Communication Mechanism: protect the integrity and authenticity of assets.

Testing

- Testing steps

- Notes on what to look for

- References for what is deemed secure

Note: RED often states "best practice" and leaves it to the certifier to decide what counts as best practice. The green text in the left image is a link to a Cloudflare publication, which I deemed a valid best-practice reference: the product is considered compliant if it uses a cipher suite within Cloudflare's "Modern" category.
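To make the "Modern" check repeatable, a tester could record the protocol and cipher suite the device actually negotiates. The sketch below uses Python's standard `ssl` module. The `MODERN_TLS12_SUITES` allow-list is an assumption modeled on a "Modern" tier and must be checked against the current Cloudflare publication before use; treating every TLS 1.3 suite as Modern is also an assumption of this sketch.

```python
import socket
import ssl

# ASSUMPTION: illustrative TLS 1.2 allow-list; verify against the Cloudflare publication.
MODERN_TLS12_SUITES = {
    "ECDHE-ECDSA-AES128-GCM-SHA256",
    "ECDHE-ECDSA-CHACHA20-POLY1305",
    "ECDHE-RSA-AES128-GCM-SHA256",
    "ECDHE-RSA-CHACHA20-POLY1305",
    "ECDHE-ECDSA-AES256-GCM-SHA384",
    "ECDHE-RSA-AES256-GCM-SHA384",
}


def is_modern(protocol: str, cipher_name: str) -> bool:
    """True if the negotiated protocol/cipher pair falls in the assumed Modern tier."""
    if protocol == "TLSv1.3":
        # Standard TLS 1.3 suites are all AEAD-based; treated as Modern here (assumption).
        return True
    if protocol == "TLSv1.2":
        return cipher_name in MODERN_TLS12_SUITES
    # TLS 1.1 and older are never treated as Modern.
    return False


def negotiated_cipher(host: str, port: int = 443) -> tuple[str, str]:
    """Connect to the device's web UI and return the (protocol, cipher) negotiated."""
    ctx = ssl.create_default_context()
    # Lab devices often present self-signed certificates. Verification is disabled
    # ONLY for this cipher observation; certificate checks belong to a separate test.
    ctx.check_hostname = False
    ctx.verify_mode = ssl.CERT_NONE
    with socket.create_connection((host, port), timeout=5) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            name, protocol, _bits = tls.cipher()
            return protocol, name
```

Usage would be `protocol, name = negotiated_cipher("192.168.1.50")` (a hypothetical device address) followed by `is_modern(protocol, name)`. This only observes the one suite chosen for Python's own client offer; enumerating every suite the device accepts needs a dedicated scanner such as Nmap's `ssl-enum-ciphers` script or testssl.sh.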

Key Accomplishments

- Developed comprehensive testing documentation for lab testing procedures on an example device

- Created detailed asset inventory categorizing Security Assets, Network Assets, and Admin Functions

- Established testing protocols for requirements that primarily require physical testing rather than documentation review, to conform as closely as possible to true 18031 compliance

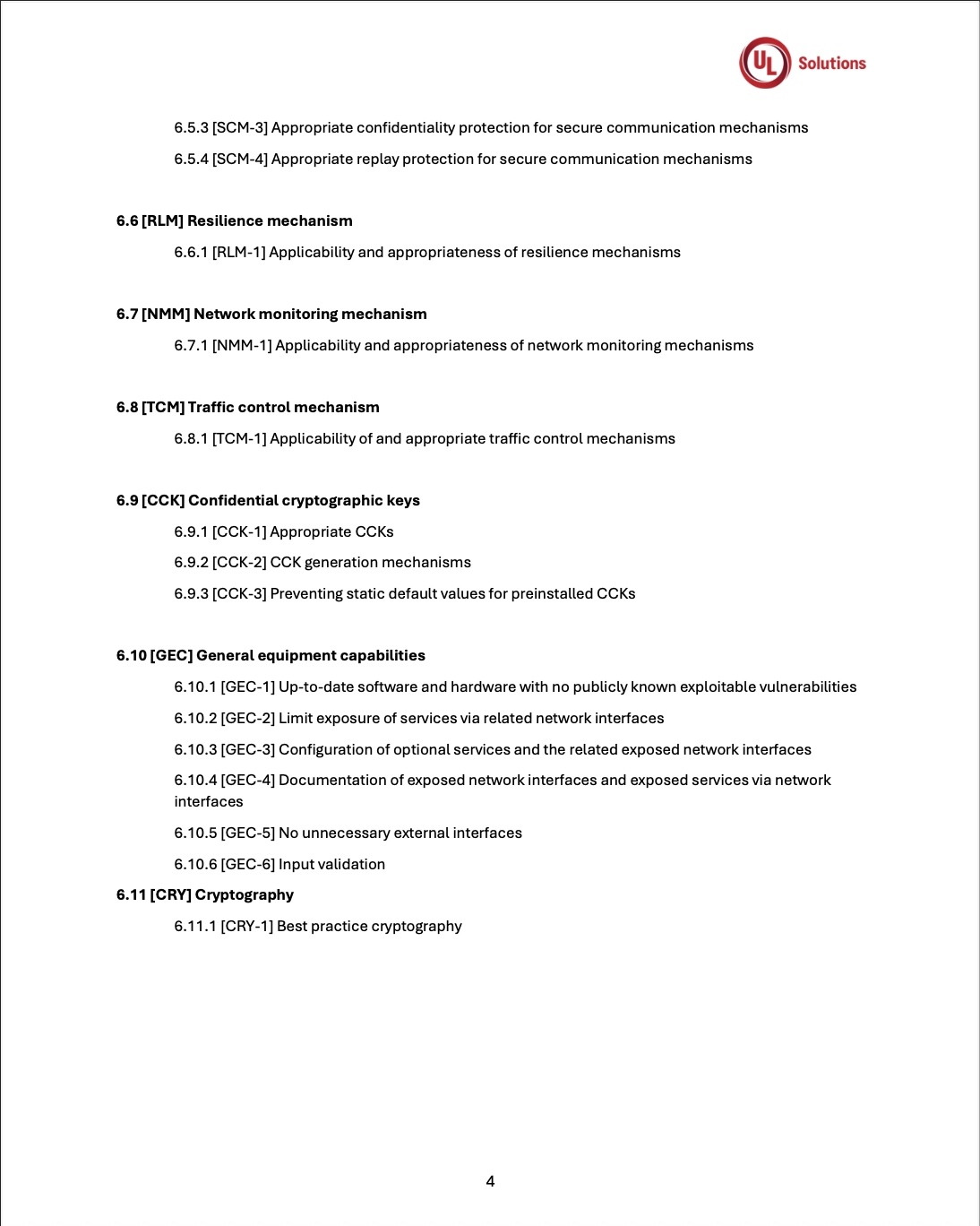

Project 2: 18031-2 Documentation

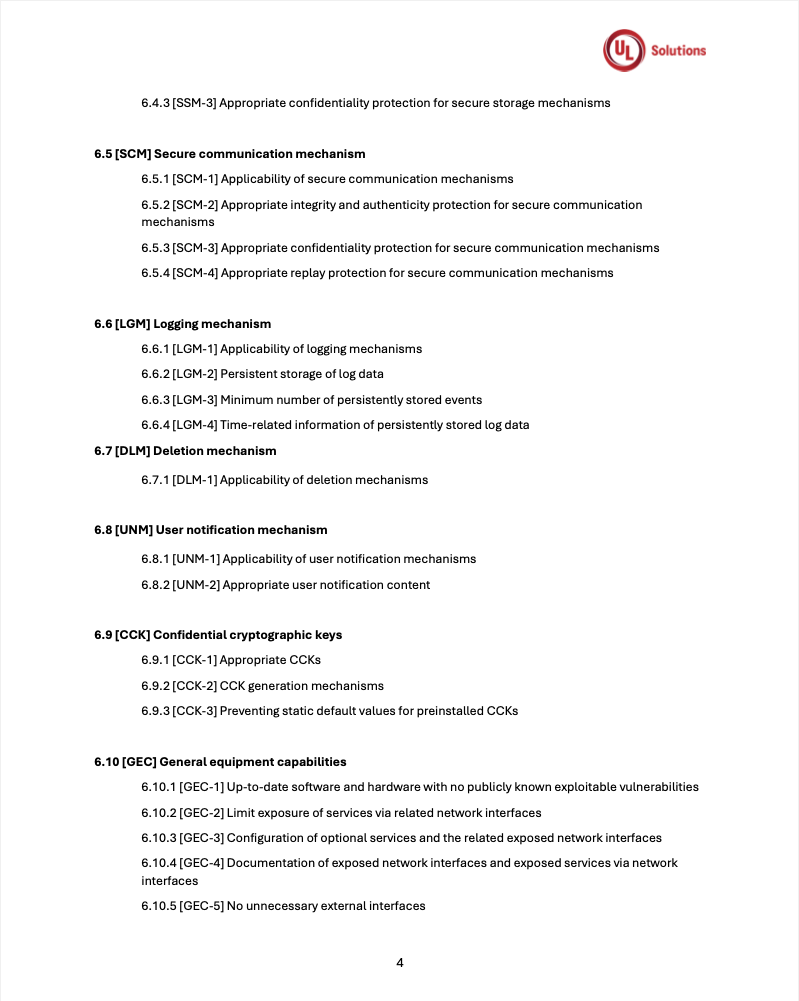

18031-2 Requirements

18031-2 is broken up into core requirements, each with sub-requirements.

It builds on 18031-1: LGM, DLM and UNM replace RLM, NMM and TCM, and GEC-7 is added.

- 6.1 [ACM] Access control mechanism (Additions: ACM-3,4,5,6)

- 6.2 [AUM] Authentication mechanisms

- 6.3 [SUM] Secure update mechanism

- 6.4 [SSM] Secure storage mechanism

- 6.5 [SCM] Secure communication mechanism

- 6.6 [LGM] Logging mechanism

- 6.7 [DLM] Deletion mechanism

- 6.8 [UNM] User notification mechanism

- 6.9 [CCK] Confidential cryptographic keys

- 6.10 [GEC] General equipment capabilities (Additions: GEC-7)

18031-2 Organization

Building upon 18031-1, this documentation extends compliance testing to personal data processing equipment:

- Enhanced Access Control: ACM-3,4,5,6 for children's privacy protection

- Logging Mechanisms: LGM requirements for persistent log storage and time-related information

- Deletion Capabilities: DLM requirements enabling users to delete personal data

- User Notifications: UNM requirements for appropriate user notification content and timing

- Privacy-Focused Testing: Specialized procedures for personal data handling compliance

The documentation focuses on privacy-specific protections while keeping the core cybersecurity requirements that 18031-2 shares with 18031-1.
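As an illustration of the kind of spot check an LGM test might include, the Python sketch below reviews exported logs for time-related information. It assumes the device can export its logs as JSON lines with a `timestamp` field in ISO 8601 form with an explicit offset; that export format and the specific checks are hypothetical and are not taken from the text of 18031-2.

```python
import json
from datetime import datetime


def check_log_timestamps(lines: list[str]) -> list[str]:
    """Return problems found in exported JSON-lines logs (the format is hypothetical)."""
    problems = []
    previous = None
    for number, line in enumerate(lines, start=1):
        entry = json.loads(line)
        raw = entry.get("timestamp")
        if raw is None:
            problems.append(f"line {number}: no timestamp")
            continue
        try:
            # ISO 8601 with an explicit offset, e.g. "2025-03-01T09:00:00+00:00"
            stamp = datetime.fromisoformat(raw)
        except ValueError:
            problems.append(f"line {number}: unparseable timestamp {raw!r}")
            continue
        if stamp.tzinfo is None:
            # Without an offset the entry cannot be placed unambiguously in time
            problems.append(f"line {number}: timestamp has no timezone")
            continue
        if previous is not None and stamp < previous:
            problems.append(f"line {number}: out of chronological order")
        previous = stamp
    return problems


sample = [
    '{"timestamp": "2025-03-01T09:00:00+00:00", "event": "login"}',
    '{"timestamp": "2025-03-01T09:05:00+00:00", "event": "data_export"}',
    '{"event": "settings_change"}',
]
print(check_log_timestamps(sample))  # -> ['line 3: no timestamp']
```

Running the same check on an export taken after a reboot is one simple way to confirm that entries persist.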

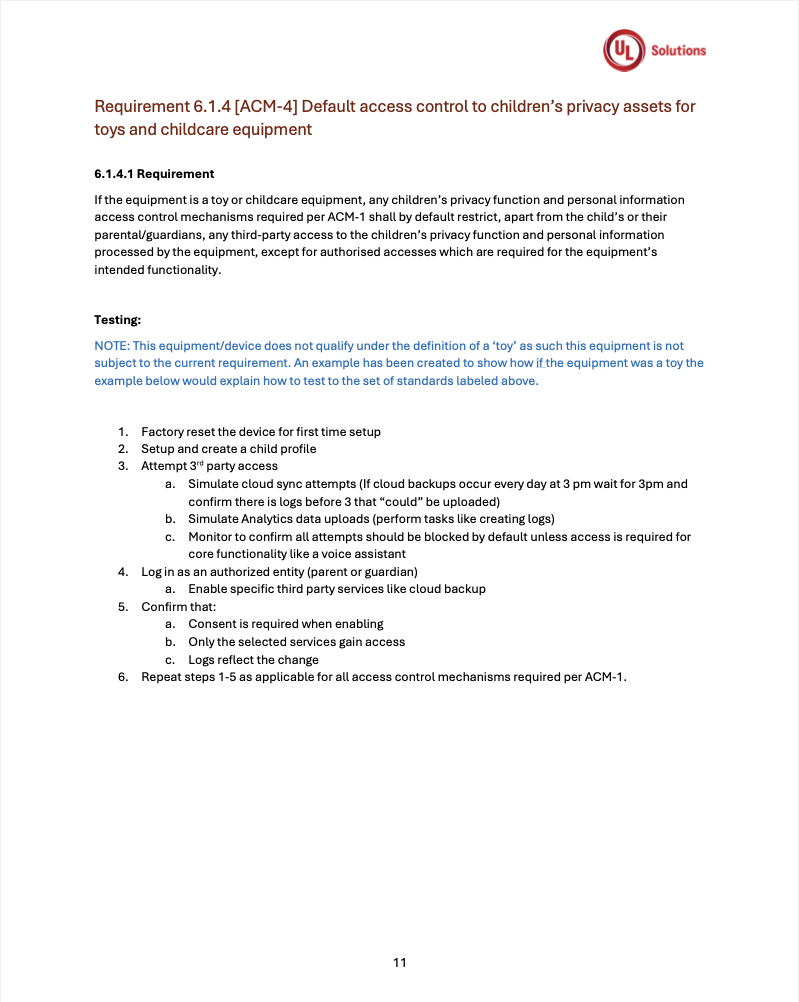

Example Requirement Breakdown

Requirement

6.1.4 [ACM-4] Access Control Mechanisms: confirmation that no form of third-party access is possible until a parent has given consent.

Testing

Not all requirements in 18031-2 apply to every device.

Testing steps walk the tester through how to test to the standard and mark a PASS.

Note: This device does not qualify under the definition of a 'toy', so it is not subject to this requirement. However, for the benefit of the certifier, the guide on the left would be valid and applicable if the product were classified as a 'toy'.
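For the hypothetical 'toy' case, the PASS/FAIL logic of ACM-4 can be stated compactly: the result fails if any third party was granted access before a parent had consented. The sketch below assumes the tester records each observed access attempt; the `AccessAttempt` record and the party name are hypothetical.

```python
from typing import NamedTuple


class AccessAttempt(NamedTuple):
    """One observation from the test run (hypothetical record format)."""
    third_party: str
    parental_consent_given: bool
    access_granted: bool


def acm4_verdict(attempts: list[AccessAttempt]) -> str:
    """PASS unless some third party got access before a parent had consented."""
    for attempt in attempts:
        if attempt.access_granted and not attempt.parental_consent_given:
            return "FAIL"
    return "PASS"


observed = [
    AccessAttempt("cloud-analytics", parental_consent_given=False, access_granted=False),
    AccessAttempt("cloud-analytics", parental_consent_given=True, access_granted=True),
]
print(acm4_verdict(observed))  # -> PASS
```

Access granted after consent does not affect the verdict; only access before consent does.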

Key Accomplishments

- Extended 18031-1 framework to cover Personal Data Processing Equipment requirements

- Developed specialized testing procedures for children's privacy protection mechanisms

- Created comprehensive documentation for LGM, DLM, UNM, and enhanced ACM testing procedures

- Where a requirement was deemed non-applicable, testing documentation was still created to ensure clarity and understanding of the requirement's intent

Project 3: Local LLM Research & Development

The Problem

When certifying products, team members must create documentation manually from templates. This process results in:

- 5-10 hours per project spent writing and adjusting documentation

- Inconsistent formatting (minor, but it can be impactful)

- Tasks that could be automated being completed by hand

- Security constraints: data cannot be shared outside the organization, i.e. no external AI or collaboration tools can be used

The Solution

An automated UL2900 expert AI:

- Local AI model fine-tuned on actual documentation standards

- Generates consistent, compliant documents that adhere to all relevant guidelines with minimal human intervention and adjustment

- Runs completely offline in an isolated local container, so project data never leaves the organization

- Potential to automate repetitive tasks, saving certifiers time

Technical Implementation

Foundation Model

Qwen2.5 7B - 7B parameters for lightweight testing with structured data extraction & long context understanding capabilities.

Infrastructure

Docker→Ollama→Open WebUI - Isolated locally to be secure with full control over the deployment environment.

Training Data

4 Real Projects - Complete with project input data and expected output with document layout and format included.

Fine Tuning

Low-Rank Adaptation (LoRA) - Preserves the base model's knowledge by freezing its weights and training small low-rank adapter matrices that add specialized expertise, using the Unsloth fine-tuning library.
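For intuition, LoRA keeps the pretrained weight matrix W_0 frozen and learns a low-rank update BA scaled by a hyperparameter alpha over the rank r. The standard formulation and a worked parameter count are shown below; the layer size d = k = 4096 and rank r = 16 are hypothetical values chosen for illustration, not the settings used in this trial.

```latex
\begin{aligned}
W' &= W_0 + \frac{\alpha}{r} B A, \quad W_0 \in \mathbb{R}^{d \times k},\ B \in \mathbb{R}^{d \times r},\ A \in \mathbb{R}^{r \times k},\ r \ll \min(d, k) \\
\text{full update: } d k &= 4096 \times 4096 = 16{,}777{,}216 \\
\text{LoRA update: } r(d + k) &= 16 \times 8192 = 131{,}072 \\
\frac{r(d + k)}{d k} &= \frac{131{,}072}{16{,}777{,}216} = \frac{1}{128} \approx 0.78\%
\end{aligned}
```

Because only A and B are trained, the base model's general knowledge stays in W_0 while the small adapter carries the specialized documentation behavior.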

Deployment

Ollama & Open WebUI - Convert the model to GGUF format for Ollama and run it locally for testing.

Testing

Testing Prompts and Data - Comprehensive evaluation of model output quality and consistency.
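A consistency check like this can be scripted against the locally running model. The sketch below calls Ollama's local REST endpoint (`/api/generate` on the default port 11434) using only Python's standard library, runs the same prompt several times, and reports which required headings each output is missing. The model name and the list of required headings are hypothetical placeholders, and a substring check is only a rough proxy for formatting compliance.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_request(model: str, prompt: str) -> dict:
    """Non-streaming generate request; temperature 0 makes runs easier to compare."""
    return {"model": model, "prompt": prompt, "stream": False,
            "options": {"temperature": 0}}


def generate(model: str, prompt: str) -> str:
    """Send one prompt to the local model and return its text response."""
    data = json.dumps(build_request(model, prompt)).encode("utf-8")
    req = urllib.request.Request(OLLAMA_URL, data=data,
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req, timeout=300) as resp:
        return json.loads(resp.read())["response"]


def missing_sections(output: str, required: list[str]) -> list[str]:
    """Headings the output should contain but does not (simple substring check)."""
    return [heading for heading in required if heading not in output]


def consistency_report(model: str, prompt: str, required: list[str],
                       runs: int = 3) -> list[list[str]]:
    """Run the same prompt several times and list the missing sections per run."""
    return [missing_sections(generate(model, prompt), required) for _ in range(runs)]
```

With a hypothetical model name, usage would look like `consistency_report("ul2900-expert", prompt, ["Scope", "Test Results"])`; a run of empty lists means every output contained the expected headings.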

LLM Development Process

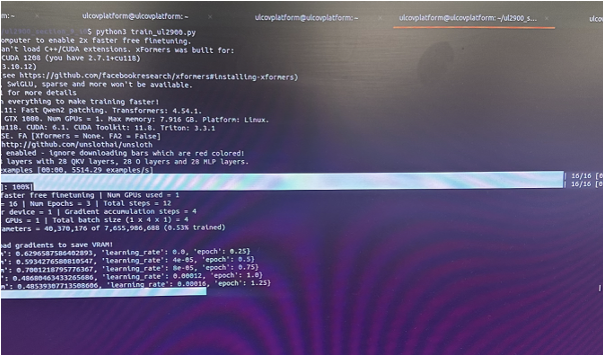

Model Fine-tune Training

The training process ran over multiple epochs, in which the model learned from actual UL documentation standards and its errors were corrected through backpropagation, updating the LoRA adapter weights. The 5 lines of text shown during training represent individual epochs, i.e. complete passes through all training data.

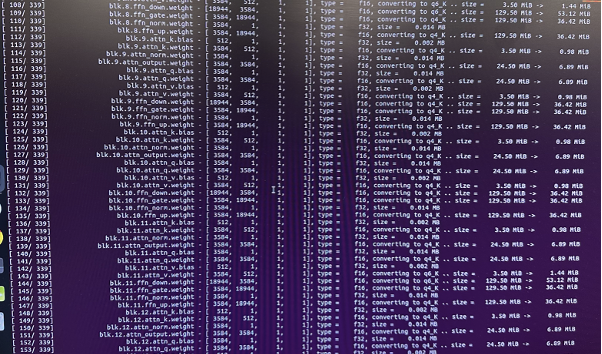
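The relationship between epochs and weight updates can be seen in a toy example. The sketch below is a hypothetical one-parameter model in plain Python, not the Unsloth training code: each epoch is one complete pass over the data, and each step computes the gradient of the squared error and moves the weight against it. Backpropagation computes these gradients automatically for every trainable weight in a deep network; here the single gradient is written out by hand.

```python
# Toy illustration (not the actual fine-tuning code): fit y = w * x by gradient descent.
data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]  # generated with the true weight w = 2.0
w = 0.0
learning_rate = 0.05


def mean_squared_error(weight: float) -> float:
    """Average squared error of the model over the whole dataset."""
    return sum((weight * x - y) ** 2 for x, y in data) / len(data)


losses = []
for epoch in range(5):  # one epoch = one complete pass over the training data
    for x, y in data:
        prediction = w * x
        grad = 2 * (prediction - y) * x  # derivative of (w*x - y)^2 with respect to w
        w -= learning_rate * grad        # gradient-descent update of the weight
    losses.append(mean_squared_error(w))
    print(f"epoch {epoch + 1}: loss={losses[-1]:.6f}, w={w:.4f}")
```

The loss drops after every epoch as w approaches 2.0, which is the same pattern a fine-tuning run's logged loss is expected to show.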

Model Quantization

Model quantization converted the trained model to a smaller, faster version for testing while largely preserving accuracy. This technique reduces computational and memory requirements, allowing for faster inference and smaller model sizes suitable for local deployment.
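The idea behind quantization can be shown with a minimal sketch in plain Python: symmetric 8-bit quantization of one block of weights, plus a weights-only memory estimate at different bit widths. GGUF's formats (for example the 4-bit K-quant variants) use more elaborate block-wise schemes, so this illustrates the principle rather than the conversion that was actually run; the weight values are made up.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric quantization: map floats to integers in [-127, 127] with one scale."""
    # Guard against an all-zero block, which would otherwise give a zero scale
    scale = max(abs(w) for w in weights) / 127 or 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the stored integers."""
    return [v * scale for v in q]


def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Weights only; ignores KV cache, activations and runtime overhead."""
    return params_billion * 1e9 * bits_per_weight / 8 / 1e9


block = [0.12, -0.50, 0.33, 0.07, -0.25]  # made-up example weights
q, scale = quantize_int8(block)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(block, restored))
print(q, max_error)

for size in (7, 14, 32):
    print(f"{size}B: fp16 ~{weight_memory_gb(size, 16):.1f} GB, "
          f"4-bit ~{weight_memory_gb(size, 4):.1f} GB")
```

Each restored weight is off by at most half a quantization step, while storage drops from 16 bits to 8 (or 4) bits per weight. The same arithmetic gives a rough sense of the memory needed for the 14B and 32B models in the roadmap.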

Future Scaling Roadmap

Production-Level Enhancements

The trial run helped create a roadmap for developing it into a production-level implementation:

- Scale model to 14B or 32B parameters for improved accuracy and capability

- Larger training data and model refinement with more comprehensive documentation examples

- Full UL2900 standard coverage, expanding from the limited trial to complete standard compliance

- Extension beyond UL2900 to the full range of cybersecurity testing standards

- Integration with lab workflows for seamless adoption by testing personnel

Key Learning Outcomes

Technical Skills Developed

- Comprehensive understanding of EU Radio Equipment Directive compliance requirements

- Hands-on experience with fine-tuning large language models using LoRA techniques

- Practical application of Docker, Ollama, and Open WebUI for local AI deployment

- Technical documentation writing for cybersecurity testing procedures

- Experience with model quantization and optimization for local deployment

Professional Development

- Understanding of regulatory compliance in cybersecurity testing environments

- Experience working in a professional laboratory setting with industry standards

- Collaboration with cybersecurity professionals and testing engineers

- Project management skills across multiple concurrent documentation projects

- Innovation in applying AI/ML solutions to real-world enterprise challenges

Impact and Results

- Major projects completed: 3

- Internship duration: 12 weeks

- 18031 sections documented: 2

- AI solutions prototyped: 1

Long-term Impact

The documentation and AI solutions developed during this internship provide lasting value to UL Solutions:

- Comprehensive testing guides that will help future lab members understand and implement 18031-1 and 18031-2 testing procedures

- Proof-of-concept for AI-assisted documentation generation, potentially reducing documentation time from 5-10 hours to significantly less

- Roadmap for scaling AI solutions across all cybersecurity testing standards, not just UL2900

- Framework for ensuring consistent, compliant documentation across all testing projects

- Potential to become internal documentation accessible to 15,000+ employees across UL Solutions, enabling company-wide learning and standardized RED compliance testing

Acknowledgments

I would like to thank the UL Solutions cybersecurity team for providing mentorship and guidance throughout this internship experience. Special thanks to my supervisors and colleagues who provided valuable feedback on documentation standards and supported the exploration of innovative AI solutions for laboratory operations.

This internship provided invaluable real-world experience in cybersecurity testing, regulatory compliance, and the practical application of artificial intelligence in enterprise environments, and I would like to express my gratitude for the opportunity to contribute to such impactful projects.

Proprietary Content Notice

All images, documentation, and content on this page are proprietary materials created for UL Solutions by Coleman Pagac. This information is primarily based on presentation slides that I have been given permission to use for personal purposes.

Important: All content on this page is not for public use and cannot be reproduced, distributed, or used without express written permission from Coleman Pagac. This portfolio presentation is intended solely for demonstration of professional work experience and technical capabilities.

For licensing inquiries or permission requests, please contact: copyright@colemanpagac.com